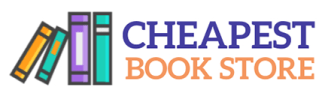

Many-Sorted Algebras for Deep Learning and Quantum Technology – 1st Edition

Price: $38.00 (original price: $180.00)

Author: Charles R. Giardina

Publisher: Morgan Kaufmann

Print ISBN: 9780443136979

Delivery Time: Within 4 hours

Copyright: 2024

- Save up to 60% by choosing our eBook

- High-quality PDF Format

- Lifetime & Offline Access

Many-Sorted Algebras for Deep Learning and Quantum Technology, 1st Edition by Charles R. Giardina offers a clear, rigorous bridge between abstract algebraic methods and cutting-edge advances in machine learning and quantum computing. This authoritative volume introduces many-sorted algebra as a unifying mathematical language to model heterogeneous data types, layered architectures, and quantum-inspired operators—making complex systems easier to reason about, design, and verify.

Readers will find accessible explanations of core concepts, carefully developed notation, and concise proofs that illuminate how many-sorted structures capture both classical neural architectures and quantum information processes. The book emphasizes practical relevance: conceptual frameworks are paired with illustrative examples that demonstrate how algebraic thinking can simplify model composition, interoperability, and abstraction across disciplines.

Designed for researchers, graduate students, and industry practitioners in deep learning, quantum technology, computer science, and applied mathematics, this text supports cross-disciplinary work in academia and tech labs worldwide. Whether you’re developing robust neural systems, exploring quantum algorithms, or formalizing hybrid architectures, Giardina’s treatment provides tools to sharpen reasoning and accelerate innovation.

Engaging, scholarly, and forward-looking, Many-Sorted Algebras for Deep Learning and Quantum Technology is an essential reference for anyone serious about the mathematical foundations of modern computation. Add this 1st Edition to your library to deepen your theoretical toolkit and advance practical projects in machine learning and quantum computing—order your copy today.

Note: eBooks do not include supplementary materials such as CDs, access codes, etc.